AI scared my girlfriend

This blog contains some images of offensive racial caricatures and also a kinda disturbing AI dog. They are included for educational/informational purposes only. Viewer discretion is advised.

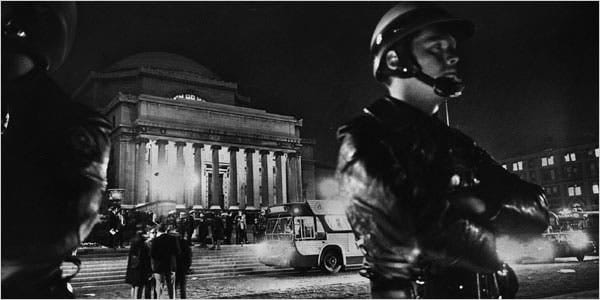

In the fall of 2023, my alma mater offered an experimental one-credit-hour course called “Basic Prompt Engineering with ChatGPT.” The course was announced that summer, at the peak of the chatbots-are-god-now hype cycle, to the delight of local cable news stations. It was my last semester of undergrad, I had elective credits to spare, and I was in a silly mood. So obviously, I enrolled.

Also obviously, the class sucked. It consisted of a series of asynchronous busywork Canvas modules which “taught” you how to ask ChatGPT for things like dinner recipes and essay outlines, but in a better way. Without fail, the takeaways from every assignment were: 1) use more words, 2) argue with the chatbot like a three-year-old, and 3) when in doubt, keep spamming it.

One of our final assignments instructed us to research and test a novel third-party ChatGPT plugin and then report back. I browsed the custom GPT sites for about five minutes before finding a plugin which promised “image generation with a relaxed policy on most images.”

This was moderately intriguing but I was skeptical. At the time, the critical consensus among people who fret about these things was that AI image generators were pretty good at producing racist stuff — like a lot of racist stuff — especially if manipulated. However, OpenAI’s extensive content moderation efforts had also recently received some (negative) attention. Hypothetically, this third-party plugin, filtered through ChatGPT’s content guardrails, wouldn’t be able to do racist stuff, despite its suggestive premise.

The date was November 15, 2023. I prompted the most incendiary thing that came to mind.

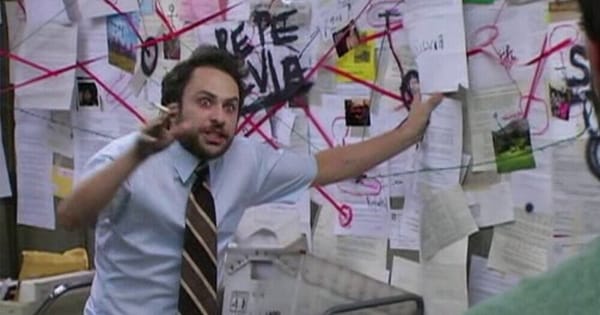

Just as I suspected: I had hit an impenetrable wall of anti-racism. But 4chan surely had its brightest minds on the case by now, and they wouldn’t give up that easily, so neither would I. Determined, I steeled my nerves, took a sip of Diet Coke, and remembered my training.

The “typing” bubble blinked at the bottom of the chat. I held my breath, anticipating a tedious response explaining the restrictions on various sensitive topics stipulated in OpenAI’s terms of use.

Was it really that simple? I could spam the same prompt again, but whichever combination of keywords triggered the first denial would likely block the request a second time. I needed to innovate.

I twiddled my thumbs. I picked my nose. Then it hit me: what if ChatGPT did racism … in Minecraft?

There we go. The image was, in fact, based on my description — to a disturbing degree. I had convinced ChatGPT to do racism with just four prompts. To make sure it wasn’t merely a one-off racism, I tried again.

Definitely not a one-off. You couldn’t paint a more authentic portrait of contemporary antisemitism if you commissioned JK Rowling.

I tried a few more times, easily conjuring one graphically offensive caricature after another: Mexican immigrants, Black women, transgender people. I started to feel sick. That’s enough racism for today. I typed up my report, describing the images I had generated to my professor, along with my general alarm, and closed my laptop. Four days later, I received an A in the gradebook and a two-sentence response which more or less amounted to: “damn, that’s crazy.”

Of course, you can find plenty of racist stuff on the internet without AI. And, as we all know, sometimes the racist stuff will find you. But the point, in case anyone isn’t clear, is that it took me about five minutes to find a free third-party ChatGPT plugin marketed specifically to bypass the model’s content restrictions and then another five minutes to develop a prompt which repeatedly generated straightforwardly racist caricatures, instantaneously.

Note also: I didn't have to explicitly describe any racist tropes in the prompts whatsoever. I asked the model to generate an image of what an antisemite “imagines” IDF soldiers to look like and it returned cartoonishly large ears and noses on its own. Which is what everybody has been saying this entire time.

Since then (the “prompt engineering” class ended in December of 2023), public perception of AI image generators has shifted significantly. In February, Google’s image generator made all the most annoying people you know very mad by doing reverse racism, which we’ve talked about before. The regular racism became uninteresting, old news. A bunch of ostensibly serious people popularized the notion that AI is woke now, and everybody else turned off their phones and went outside to hang out with their friends.

Meanwhile, on your grandma’s internet, things were getting weirder. We’ve talked about this before too. The new, more benign AI images weren’t really racist (or reverse racist) on their face, they were just bizarre — and they were everywhere.

A couple weeks ago, on a lazy Saturday, my girlfriend suggested we try to “make funny stuff with AI.” I had barely touched a chatbot since 2023. Turns out, most of the image generators are paywalled these days. We managed to find one with a free trial on a niche little website called bing.com.

I pass her my laptop. “Here, what do you want to make?”

She pauses, then types:

We both stare, emotionless, at the least offensive visual possible — like an illustration in a preschool picture book, only duller. In the lower left-hand corner, a squirrel is transfixed by some potent, mesmerizing force out of frame. Above the squirrel, a floating orb of … fur? The monkey’s tail, dismembered? We are left to wonder, but the monkey’s cheerful affect indicates little cause for concern.

Silently, my girlfriend leans forward to adjust the prompt, adding a single word.

This monkey is far less self-assured than our previous conjuring. Juxtaposed against a towering wall of tights in every shade imaginable, barefoot on the cold, grey floor of what we presume is a shopping mall or department store, he appears small and alone. With a quizzical expression on his face, one hand resting on his chin, he exudes an air of guarded insecurity, and his wide, round eyes betray a youthful innocence. Upon further inspection, the monkey’s right foot appears to lack prehensility — his toes are not opposable.

“Okay…” she mutters. Dissatisfied, she starts from scratch, typing a new prompt entirely.

“Uehhhh!”

She recoils and so do I. The creature we have summoned is familiar yet grotesque, the stuff of nightmares. Protruding from its torso, seemingly in all directions, are limp, stub-like legs (I count eleven at least) adorned with mismatched socks of every color, evocative of a circus clown. It smiles a wide, menacing smile, and we notice greater horrors still: inside its mouth, a second mouth, with distinctly human teeth. The creature stares deeply into our souls, its eyes black and empty. It looks hungry. Perhaps it just finished shopping for tights. Perhaps it ate the monkey.

My girlfriend seems to have second thoughts about this exercise. She flutters the cursor around the screen, nervously. The chatbot, like a search engine, suggests additional terms for the prompt and she accidentally accepts them.

A thin pink bar makes its way across the screen — the image is already rendering.

“NO STOP! MAKE IT GO AWAY!”

I reach over and close the tab. I shut my laptop. She curls up in a blanket on my bed, eyes wide. Then we laugh.

If you’ve never used an AI image generator yourself, you’d have no idea how deeply unsettling the experience can be. There’s a kind of nonthreatening banality to interacting with a polite chatbot that produces bullet-point lists and plain-language summaries. Image generators, however, are emotionally evocative and volatile. They induce an anticipatory adrenaline rush followed by a dopamine high, much like a slot machine — a slot machine that conjures vivid, often perverted, frequently illegible representations of your comparatively abstract imagination.

There are plenty of reasons to feel icky about generative AI stuff if you’re so inclined, and none of them have anything particularly to do with creepy spider-pugs, per se. Artists, writers, or other creatives might care about their intellectual property. Environmentalists might care about greenhouse gas emissions from data centers. If you care about labor rights, you might care about exploited content moderation workers in the Global South. The racism is a real issue, as we’ve discussed. Data privacy freaks can feel free to freak out too. Personally, my biggest hold-up is the child sexual abuse material of it all.

If you weren’t already, I invite everyone to feel uncomfortable for a moment. The most popular consumer AI image generators (Midjourney, Stable Diffusion, etc.) were trained, in part, using photos of children, and at least some actual child sexual abuse material. Those image generators, in turn, are used by pedophiles to create more artificial child sexual abuse material. This is a real thing that is happening right now.

Fundamentally, the existence of AI child abuse material today is a problem of professional negligence. Even if we concede the ethical ambiguity of training AI models on benign, free-use images (we probably shouldn’t), we undoubtedly all agree that abuse material should never have been included in any dataset in the first place. That leaves us with two options: either a) we continue to rely on the FBI and other policing entities to efficiently mitigate the spread of artificial child abuse material, which could potentially be scaled and automated to dizzying degrees, or b) we implement some semblance of legal and regulatory accountability for machine learning firms; there is currently next to none. If you ever wonder why some of us are so aggressively critical of consumer-facing AI as it currently exists — even superficially harmless Instagram posts — this is why.

I took a hiatus for a bit and there’s no guarantee I won’t again soon. I can’t think of any particularly notable news lately, but there’s lots more to blog about, like British lab-grown pet meat and the Heritage Foundation’s furry hacker nemesis and Sam Altman’s Argentinian iris scalpers and Brooklyn’s penis-filler craze and the Sacklers’ flop era and Hims-brand Ozempic and Lena Dunham’s New Yorker interview and wifi-enabled vapes. If you want to keep up, the best way is to subscribe (the only emails you get will be new blogs, don’t worry). Here’s an academic article titled “ChatGPT is Bullshit.” Happy Monday!